Mudra - A Unified Multimodal Interaction Framework

Multimodal interfaces have become an important solution in the domain of post-WIMP interfaces. However, significant challenges must still be overcome before they can reach their full potential. We addressed the challenge of managing multimodal input data coming from different levels of abstraction. Our investigation of related work shows that existing multimodal fusion approaches fall into two main categories: data stream-oriented solutions and semantic inference-based solutions. We further highlighted that there is a gap between these two categories, and that most approaches trying to bridge it resort to ad-hoc solutions to work around limitations imposed by their initial implementation choices. The fact that most multimodal interaction tools need such ad-hoc solutions at some point suggests a need for a unified software architecture with fundamental support for fusion across low-level data streams and high-level semantic inferences.

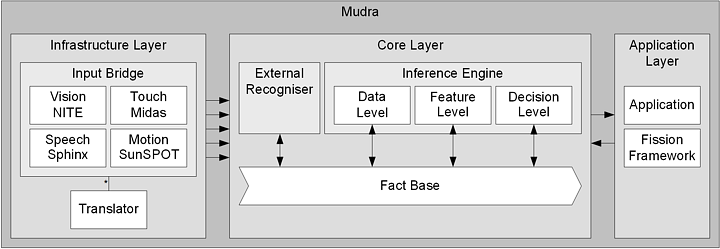

We presented Mudra, a unified multimodal interaction framework for processing low-level data streams as well as high-level semantic inferences. Our approach is centred on a fact base that is populated with multimodal input from various devices and recognisers. Different recognition and multimodal fusion algorithms can access the fact base and enrich it with their own interpretations. A declarative rule-based language is used to derive low-level as well as high-level interpretations of the information stored in the fact base. Through a number of low-level and high-level input processing examples, we have demonstrated that Mudra bridges the gap between data stream-oriented and semantic inference-based approaches and represents a promising direction for future unified multimodal interaction processing frameworks.
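To make the fact-base idea more concrete, the following is a minimal sketch in Python, not Mudra's actual rule language or API. It assumes a tiny in-memory fact base in which rules are plain functions that read existing facts and return derived ones. All names (`Fact`, `FactBase`, `double_tap`, `delete_command`), the time thresholds, and the example inputs are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass(frozen=True)
class Fact:
    kind: str    # hypothetical categories: "touch", "speech", "gesture", "command"
    value: str
    time: float  # timestamp in seconds


@dataclass
class FactBase:
    facts: List[Fact] = field(default_factory=list)
    rules: List[Callable[["FactBase"], List[Fact]]] = field(default_factory=list)

    def assert_fact(self, fact: Fact) -> None:
        """Store a new fact, then let every rule derive further facts from it."""
        if fact in self.facts:  # duplicates are ignored, so derivation terminates
            return
        self.facts.append(fact)
        for rule in self.rules:
            for derived in rule(self):
                self.assert_fact(derived)

    def of_kind(self, kind: str) -> List[Fact]:
        return [f for f in self.facts if f.kind == kind]


def double_tap(fb: FactBase, max_gap: float = 0.3) -> List[Fact]:
    """Low-level rule: two consecutive touches at most max_gap seconds apart."""
    touches = sorted(fb.of_kind("touch"), key=lambda f: f.time)
    return [Fact("gesture", "double-tap", b.time)
            for a, b in zip(touches, touches[1:])
            if b.time - a.time <= max_gap]


def delete_command(fb: FactBase, window: float = 1.0) -> List[Fact]:
    """High-level rule: fuse the spoken word 'delete' with a nearby double tap."""
    return [Fact("command", "delete-selected", max(s.time, g.time))
            for s in fb.of_kind("speech") if s.value == "delete"
            for g in fb.of_kind("gesture") if g.value == "double-tap"
            if abs(s.time - g.time) <= window]


# Devices and recognisers populate the same fact base (illustrative input).
fb = FactBase(rules=[double_tap, delete_command])
fb.assert_fact(Fact("touch", "down", 0.00))
fb.assert_fact(Fact("touch", "down", 0.20))
fb.assert_fact(Fact("speech", "delete", 0.70))

print(fb.of_kind("command"))
```

In this sketch the low-level rule works on raw touch events while the high-level rule combines its result with a speech fact, so both levels of abstraction share a single store. A production system would more likely use a dedicated rule engine with incremental matching rather than re-running every rule on each assertion.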

Related Publications

Status: Finished