Expressive Control of Indirect Augmented Reality During Live Music Performances

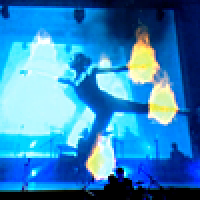

Nowadays, many music artists rely on visualisations and light shows to enhance and augment their live performances. However, the visualisations and light cues in popular music concerts are normally scripted in advance and synchronised with the music, limiting the artist's freedom for improvisation, expression and ad hoc adaptation of the show. We argue that these limitations can be overcome by combining emerging non-invasive tracking technologies with an advanced gesture recognition engine.

We present a solution that uses explicit gestures and implicit dance moves to control the visual augmentation of a live music performance. We further illustrate how our framework overcomes limitations of existing gesture classification systems by providing precise recognition based on a single gesture sample combined with expert knowledge. The presented approach enables more dynamic and spontaneous performances and, in combination with indirect augmented reality, leads to a more intense interaction between artist and audience.
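The abstract does not spell out the recognition algorithm. Purely as an illustration of what "a single gesture sample in combination with expert knowledge" can mean in general, the sketch below compares an incoming joint trajectory against one recorded template using dynamic time warping (DTW) and only accepts a match if hand-written rules also hold. DTW, the function names (`dtw_distance`, `recognise`, `hand_ends_above_start`), the threshold and the example rule are all assumptions for illustration; this is not the method described in the paper.

```python
import math
from typing import Callable, List, Sequence, Tuple

# Hypothetical representation: one tracked joint (e.g. the right hand) as (x, y, z) samples.
Point = Tuple[float, float, float]
Rule = Callable[[Sequence[Point]], bool]


def dtw_distance(a: Sequence[Point], b: Sequence[Point]) -> float:
    """Classic DTW distance between two trajectories, using Euclidean point cost."""
    n, m = len(a), len(b)
    inf = float("inf")
    cost: List[List[float]] = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = math.dist(a[i - 1], b[j - 1])
            # Extend the cheapest of the three allowed alignment steps.
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def hand_ends_above_start(traj: Sequence[Point]) -> bool:
    """Hypothetical expert rule: the gesture must finish higher (larger y) than it began."""
    return traj[-1][1] > traj[0][1]


def recognise(candidate: Sequence[Point], template: Sequence[Point],
              rules: Sequence[Rule], threshold: float) -> bool:
    """Accept the candidate if every expert rule holds and it is close to the single template."""
    if not all(rule(candidate) for rule in rules):
        return False
    return dtw_distance(candidate, template) <= threshold


# A single recorded sample: the hand rising straight up.
template = [(0.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 2.0, 0.0)]
# A live performance of the same move, slightly slower at the start.
live = [(0.0, 0.0, 0.0), (0.0, 0.5, 0.0), (0.0, 1.0, 0.0), (0.0, 2.0, 0.0)]

print(dtw_distance(live, template))                                    # 0.5
print(recognise(live, template, [hand_ends_above_start], 1.0))         # True
print(recognise(live[::-1], template, [hand_ends_above_start], 1.0))   # False
```

The rules act as a cheap filter before the distance check: a downward movement is rejected by the rule regardless of how it aligns with the template, which is one way hand-written knowledge can compensate for having only one training sample.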

Publication Reference

Hoste, L. and Signer, B.: "Expressive Control of Indirect Augmented Reality During Live Music Performances", Proceedings of the 13th International Conference on New Interfaces for Musical Expression (NIME 2013), Daejeon, Republic of Korea, May 2013

Related Research Topic(s)

- Interactive Paper and Augmented Reality

- Cross-Media Information Spaces and Architectures (CISA)

- Mobile Applications

- Augmented Reality